March 18, 2020, 21:01 · Uncategorized

Annual Reliability and Maintainability Symposium, February 2009

Abstract: Annual Reliability and Maintainability Symposium, 2009 Proceedings. To cite the Proceedings as a reference: 2009 Proc. Reliability & Maintainability Symp. The Symposium does not copyright this Proceedings itself but uses one of its cosponsors (IEEE) to perform this service and associated administration.

Copyright & Reprint Permission: Abstracting is permitted with credit to the source. Libraries are permitted to photocopy beyond the limit of US copyright law for the private use of patrons those articles in this Proceedings that carry a code at the bottom of the first page, provided the per-copy fee indicated in the code is paid through: Copyright Clearance Center · 222 Rosewood Drive · Danvers, Massachusetts 01923 USA. For other copying, reprint or reproduction permission, write to: IEEE Copyrights Manager, IEEE Operations Center, PO Box 1331 (445 Hoes Lane), Piscataway, New Jersey USA. Copyright © 2009 by the Institute of Electrical & Electronics Engineers, Inc.


All rights reserved. Printed in the United States of America. ISSN 0149-144X. CD ROM ISBN: 978-1-4244-2509-9. Library of Congress 78-132873. IEEE Catalog Number: CFP09RAM-CDR. Keywords: RAMS 2009 Proceedings - Reliability Management - Standards.

Since the early 2000s, the U.S. Department of Defense (DoD) has carried out several examinations of the defense acquisition process and has begun to develop some new approaches.

These developments were in response to what was widely viewed as deterioration in the process over the preceding two or so decades.

During the 1990s, some observers characterized the view of defense acquisition as one of believing that if less oversight were exercised over the development of weapons systems by contractors, the result would be higher quality (and more timely delivery of) defense systems. Whether or not this view was widely held, the 1990s were also the time that a large fraction of the reliability engineering expertise in both the Office of the Secretary of Defense (OSD) and the services was lost (see Adolph et al., 2008), and some of the formal documents providing details on the oversight of system suitability were either cancelled or not updated. For instance, DoD’s Guide for Achieving Reliability, Availability, and Maintainability (known as the RAM Primer) failed to include many newer methods, the Military Handbook: Reliability Prediction of Electronic Equipment (MIL-HDBK-217) became increasingly outdated, and in 1998 DoD cancelled Reliability Program for Systems and Equipment Development and Production (MIL-STD-785B) (although industry has continued to follow its suggested procedures).

A criticism of this standard was that it took too reactive an approach to achieving system reliability goals. In particular, this standard presumed that approximately 30 percent of system reliability would come from design choices, while the remaining 70 percent would be achieved through reliability growth during testing.

During the early to mid-2000s, it became increasingly clear that something, possibly this strategy of relaxed oversight, was not working, at least insofar as the reliability of fielded defense systems was concerned. Summaries of the evaluations of defense systems in development in the annual reports of the Director of Operational Test and Evaluation (DOT&E) between 2006 and 2011 reported that a large percentage of defense systems (often as high as 50 percent) failed to achieve their required reliability during operational test. Because of the 10- to 15-year development time for defense systems, relatively recent data still reflect the procedures in place during the 1990s.

The rest of this appendix highlights sections from reports over the past 8 years that have addressed this issue.

DOT&E 2007 ANNUAL REPORT

In the 2007 DOT&E Annual Report, Director Charles McQueary provided a useful outline of what, in general, needed to be changed to improve the reliability of defense systems, and in particular why attention to reliability early in development was important (U.S. Department of Defense, 2007a):

Our analysis revealed reliability returns-on-investment between a low of 2 to 1 and a high of 128 to 1. The average expected return is 15 to 1, implying a $15 savings in life cycle costs for each dollar invested in reliability.

Since the programs we examined were mature, I believe that earlier reliability investment (ideally, early in the design process), could yield even larger returns with benefits to both warfighters and taxpayers. I also believe an effort to define best practices for reliability programs is vital and that these should play a larger role in both the guidance for, and the evaluation of, program proposals. Once agreed upon and codified, reliability program standards could logically appear in both Requests for Proposals (RFPs) and, as appropriate, in contracts.

Industry’s role is key in this area.

The single most important step necessary to correct high suitability failure rates is to ensure programs are formulated to execute a viable systems engineering strategy from the beginning, including a robust reliability, availability, and maintainability (RAM) program, as an integral part of design and development. No amount of testing will compensate for deficiencies in RAM program formulation.

The report found that the use of reliability growth in development had been discontinued by DoD more than 15 years previously. It made several recommendations to the department regarding the defense acquisition process: DoD should identify and define RAM requirements within the Joint Capabilities Integration Development System (JCIDS) and incorporate them in the Request for Proposal (RFP) as a mandatory contractual requirement; during source selection, it should evaluate the bidder’s approaches to satisfying RAM requirements.

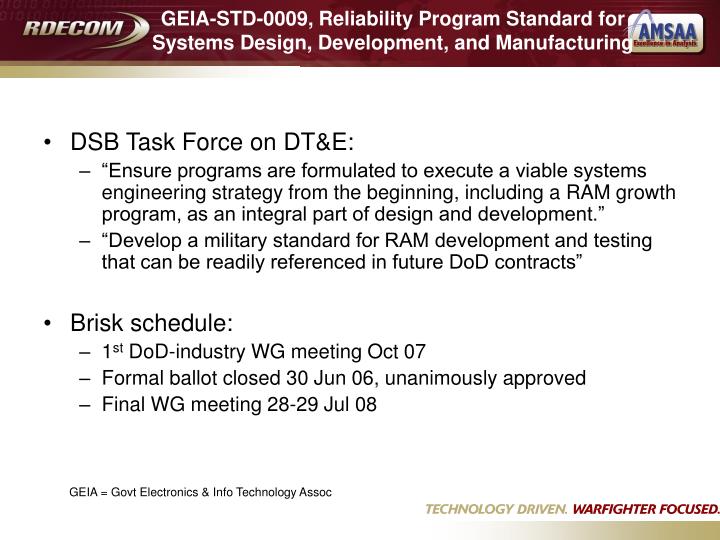

DoD should also ensure flow-down of RAM requirements to subcontractors and require development of leading indicators to ensure RAM requirements are met.

In addition, the task force recommended that DoD require the inclusion of a robust reliability growth program as a mandatory contractual requirement, document progress as part of every major program review, and ensure that a credible reliability assessment is conducted during the various stages of the technical review process and that reliability criteria are achievable in an operational environment.

This report also argued that there was a need for a standard of best practices that defense contractors could use to prepare proposals and contracts for the development of new systems. One result of this suggestion was the formation of a committee that included representatives from industry, DoD, academia, and the services, under the auspices of the Government Electronics and Information Technology Association (GEIA).

The resulting standard, ANSI/GEIA-STD-0009, “Reliability Program Standard for Systems Design, Development, and Manufacturing,” was certified by the American National Standards Institute in 2008 and designated as a DoD standard to make it easy for program managers to incorporate best reliability practices in requests for proposals (RFPs) and in contracts. (This standard replaced MIL-STD-785, Reliability Program for Systems and Equipment.)

ARMY ACQUISITION EXECUTIVE MEMORANDUM

About the same time, the Army modified its acquisition policy, described in an Army acquisition executive memorandum on reliability (U.S. Department of Defense, 2007b). The memo stated (p. 1): “Emerging data shows that a significant number of U.S. Army systems are failing to demonstrate established reliability requirements during operational testing and many of these are falling well short of their established requirement.”

To address this problem, the Army instituted a system development and demonstration reliability test threshold process. The process mandated that an initial reliability threshold be established early enough to be incorporated into the system development and demonstration contract. It also said that the threshold should be attained by the end of the first full-up, integrated, system-level developmental test event. The default value for the threshold was 70 percent of the reliability requirement specified in the capabilities development document.
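The default threshold rule described above is simple arithmetic, and a minimal sketch may make it concrete. The function names and the 100-hour requirement below are hypothetical, and expressing the requirement as a mean time between failures in hours is an assumption made only for illustration; the memorandum itself is not quoted here as specifying units.

```python
def initial_reliability_threshold(requirement, fraction=0.70):
    """Return the early-test threshold as a fraction of the stated requirement.

    `requirement` is assumed (for illustration) to be an MTBF-like figure
    where larger is better; `fraction` defaults to the 70 percent value
    mentioned in the text.
    """
    if requirement <= 0:
        raise ValueError("requirement must be positive")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return fraction * requirement


def meets_threshold(demonstrated, requirement, fraction=0.70):
    """True if the demonstrated value reaches the early-test threshold."""
    return demonstrated >= initial_reliability_threshold(requirement, fraction)


if __name__ == "__main__":
    requirement_hours = 100.0  # hypothetical requirement
    print(initial_reliability_threshold(requirement_hours))
    print(meets_threshold(75.0, requirement_hours))
    print(meets_threshold(60.0, requirement_hours))
```

Under these assumptions, a system that demonstrates 75 hours against a 100-hour requirement clears the early threshold, while one that demonstrates 60 hours does not.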

Furthermore, the Test and Evaluation Master Plan (TEMP) was to include test and evaluation planning for evaluation of the threshold and growth of reliability throughout system development.

Government data and the use of operationally representative environments in early testing.

The subsequent report of this working group (U.S. Department of Defense, 2008b) argued for six requirements: (1) a mandatory reliability policy, (2) program guidance for early reliability planning, (3) language for RFPs and contracts, (4) a scorecard to evaluate bidders’ proposals, (5) standard evaluation criteria for credible assessments of program progress, and (6) the hiring of a cadre of experts in each service. The report also endorsed use of specific contractual language that was based on that in ANSI/GEIA-STD-0009 (see above).

With respect to RFPs, the working group report contained the following advice for mandating reliability activities in acquisition contracts (U.S. Department of Defense, 2008b):

The contractor shall develop a reliability model for the system.


At minimum, the system reliability model shall be used to (1) generate and update the reliability allocations from the system level down to lower indenture levels, (2) aggregate system-level reliability based on reliability estimates from lower indenture levels, (3) identify single points of failure, and (4) identify reliability-critical items and areas where additional design or testing activities are required in order to achieve the reliability requirements. The system reliability model shall be updated whenever new failure modes are identified, failure definitions are updated, operational and environmental load estimates are revised, or design and manufacturing changes occur throughout the life cycle. Detailed component stress and damage models shall be incorporated as appropriate.

The report continued with detailed requirements for contractors:

The contractor shall implement a sound systems-engineering process to translate customer/user needs and requirements into suitable systems/products while balancing performance, risk, cost, and schedule. The contractor shall estimate and periodically update the operational and environmental loads (e.g., mechanical shock, vibration, and temperature cycling) that the system is expected to encounter in actual usage throughout the life cycle.

These loads shall be estimated for the entire life cycle, which will typically include operation, storage, shipping, handling, and maintenance. The estimates shall be verified to be operationally realistic with measurements using the production-representative system in time to be used for Reliability Verification. The contractor shall estimate the lifecycle loads that subordinate assemblies, subassemblies, components, commercial-off-the-shelf, non-developmental items, and government-furnished equipment will experience as a result of the product-level operational and environmental loads estimated above. These estimates and updates shall be provided to teams developing assemblies, subassemblies, and components for this system. The identification of failure modes and mechanisms shall start immediately after contract award.

The estimates of lifecycle loads on assemblies, subassemblies, and components obtained above shall be used as inputs to engineering- and physics-based models in order to identify potential failure mechanisms and the resulting failure modes. The teams developing assemblies, subassemblies, and components for this system shall identify and confirm through analysis, test or accelerated test the failure modes and distributions that will result when lifecycle loads estimated above are imposed on these assemblies, subassemblies and components. All failures that occur in either test or in field shall be analyzed until the root cause failure mechanism has been identified. Identification of the failure mechanism provides the insight essential to the identification of corrective actions, including reliability improvements. Predicted failure modes/mechanisms shall be compared with those from test and the field. The contractor shall have an integrated team, including suppliers of assemblies, subassemblies, components, commercial-off-the-shelf, non-developmental items, and government-furnished equipment, as applicable, analyze all failure modes arising from modeling, analysis, test, or the field throughout the life cycle in order to formulate corrective actions. The contractor shall deploy a mechanism (e.g., a Failure Reporting, Analysis, and Corrective Action System or a Data Collection, Analysis, and Corrective Action System) for monitoring and communicating throughout the organization (1) description of test and field failures, (2) analyses of failure mode and root-cause failure mechanism, (3) the status of design and/or process corrective actions and risk-mitigation decisions, (4) the effectiveness of corrective actions, and (5) lessons learned.

The model developed in System Reliability Model shall be used, in conjunction with expert judgment, in order to assess if the design (including commercial-off-the-shelf, non-developmental items, and government-furnished equipment) is capable of meeting reliability requirements in the user environment. If the assessment is that the customer’s requirements are infeasible, the contractor shall communicate this to the customer. The contractor shall allocate the reliability requirements down to lower indenture levels and flow them and needed inputs down to its subcontractors/suppliers. The contractor shall assess the reliability of the system periodically throughout the life cycle using the System Reliability Model, the lifecycle operational and environmental load estimates generated herein, and the failure definition and scoring criteria. The contractor shall understand the failure definition and scoring criteria and shall develop the system to meet reliability requirements when these failure definitions are used and the system is operated and maintained by the user. The contractor shall conduct technical interchanges with the customer/user in order to compare the status and outcomes of Reliability Activities, especially the identification, analysis, classification, and mitigation of failure modes.

REQUIREMENTS FOR TEMPS

Also beginning in 2008, DOT&E initiated the requirement that TEMPs contain a process for the collection and reporting of reliability data and that they present specific plans for reliability growth during system development.

The 2011 DOT&E Annual Report (U.S. Department of Defense, 2011a) reported on the effect of this requirement, noting that in a survey of 151 programs with DOT&E-approved TEMPs in development carried out in 2010 and focusing on those programs with TEMPs approved since 2008, 90 percent planned to collect and report reliability data. (There have been more recent reviews carried out by DOT&E with similar results; see, in particular, U.S. Department of Defense, 2013.) In addition, these TEMPs were more likely to (1) have an approved system engineering plan, (2) incorporate reliability as an element of test strategy, (3) document their reliability growth strategy in the TEMP, (4) include reliability growth curves in the TEMP, (5) establish reliability-based milestone or operational testing entrance criteria, and (6) collect and report reliability data. Unfortunately, possibly because of the long development time for defense systems, or possibly because of a disconnect between reporting and practice, there has as yet been no significant improvement in the percentage of such systems that meet their reliability thresholds. Also, there is no evidence that programs are using reliability metrics to ensure that the growth in reliability will result in the systems’ meeting their required levels. As a result, systems continue to enter operational testing without demonstrating their required reliability.

LIFE-CYCLE COSTS AND RAM REQUIREMENTS

The Defense Science Board (U.S. Department of Defense, 2008a) study on developmental test and evaluation also helped to initiate four activities: (1) establishment of the Systems Engineering Forum, (2) institution of reliability growth training, (3) establishment of a reliability senior steering group, and (4) establishment of the position of Deputy Assistant Secretary of Defense (System Engineering).

The Defense Science Board’s study also led to a memorandum from the Under Secretary of Defense for Acquisition, Technology, and Logistics (USD AT&L), “Implementing a Life Cycle Management Framework” (U.S. Department of Defense, 2008c).

This memorandum directed the service secretaries to establish policies in four areas.

First, all major defense acquisition programs were to establish target goals for the metrics of materiel reliability and ownership costs. This was to be done through effective collaboration between the requirements and acquisition communities that balanced funding and schedule while ensuring system suitability in the anticipated operating environment. Also, resources were to be aligned to achieve readiness levels. Second, reliability performance was to be tracked throughout the program life cycle. Third, the services were to ensure that development contracts and acquisition plans evaluated RAM during system design. Fourth, the services were to evaluate the appropriate use of contract incentives to achieve RAM objectives.

The 2008 DOT&E Annual Report (U.S. Department of Defense, 2008d, p. iii) stressed the new approach:

A fundamental precept of the new T&E [test and evaluation] policies is that expertise must be brought to bear at the beginning of the system life cycle to provide earlier learning. Operational perspective and operational stresses can help find failure modes early in development when correction is easiest. A key to accomplish this is to make progress toward Integrated T&E, where the operational perspective is incorporated into all activity as early as possible. This is now policy, but one of the challenges remaining is to convert that policy into meaningful practical application.

In December 2008, the USD AT&L issued an “Instruction” on defense system acquisition (U.S. Department of Defense, 2008e). It modified DODI 5000.02 by requiring that program managers formulate a “viable RAM strategy that includes a reliability growth program as an integral part of design and development.” It stated that RAM was to be integrated within the Systems Engineering processes, as documented in the program’s Systems Engineering Plan (SEP) and Life-Cycle Sustainment Plan (LCSP), and progress was to be assessed during technical reviews, test and evaluation, and program support reviews. It stated that, for this policy guidance to be effective, the Services must incorporate formal requirements for early RAM planning into their regulations and assure that development programs for individual systems include reliability growth and reliability testing; ultimately, the systems have to prove themselves in operational testing. Incorporation of RAM planning into Service regulation has been uneven.

In 2009, the Weapon Systems Acquisition Reform Act (WSARA, P.L. 111-23) required that acquisition programs develop a reliability growth program.

It prescribed that the duties of the directors of systems engineering were to develop policies and guidance for “the use of systems ...”

The act called for including a robust program for improving reliability, availability, maintainability, and sustainability as an integral part of design and development; identifying systems engineering requirements, including reliability, availability, maintainability, and lifecycle management and sustainability requirements, during the Joint Capabilities Integration Development System (JCIDS) process; and incorporating such systems engineering requirements into contract requirements for each major defense acquisition program.

Shortly after WSARA was adopted, a RAM cost (RAM-C) manual was produced (U.S. Department of Defense, 2009b) to guide the development of realistic reliability, availability, and maintainability requirements for the established suitability/sustainability key performance parameters and key system attributes. The RAM-C manual contains RAM planning and evaluation tools, first to assess the adequacy of the RAM program proposed and then to monitor the progress in achieving program objectives. In addition, DoD has sponsored the development of tools to estimate the investment in reliability that is needed and the return on investment possible in terms of the reduction of total life-cycle costs. These tools include algorithms to estimate how much to spend on reliability. The manual also contains workforce and expertise initiatives to bring back personnel with the expertise that was lost during the years that the importance of government oversight of RAM was discounted.

In 2010, DOT&E published sample RFP and contract language to help assure reliability growth was incorporated in system design and development contracts. DOT&E also sponsored the development of the reliability investment model (see Forbes et al., 2008) and began drafting the Reliability Program Handbook, HB-0009, meant to assist with the implementation of ANSI/GEIA-STD-0009. The TechAmerica Engineering Bulletin Reliability Program Handbook, TA-HB-0009, was published in May 2013.

On June 30, 2010, DOT&E issued a memorandum, “State of Reliability,” which strongly argued that sustainment costs are often much more. For example, the Early-Infantry Brigade Combat Team (E-IBCT) unmanned aerial system (UAS) demonstrated a mean time between system aborts of 1.5 hours, which was less than 1/10th the requirement. It would require 129 spare UAS to provide sufficient number to support the brigade’s operations, which is clearly infeasible. When such a failing is discovered in post-design testing, as is typical with current policy, the program must shift to a new schedule and budget to enable redesign and new development. For example, it cost $700M to bring the F-22 reliability up to acceptable levels.

However, this memo also points out that increases in system reliability can also come at a cost.

A reliable system can weigh more, and be more expensive, and sometimes the added reliability does not increase battlefield effectiveness and is therefore wasteful.

The memo also discussed the role of contractors:

Industry will not bid to deliver reliable products unless they are assured that the government expects and requires all bidders to take the actions and make the investments up-front needed to develop reliable systems. To obtain reliable products, we must assure vendors’ bids to produce reliable products outcompete the cheaper bids that do not.

The memo also stressed that reliability constraints must be “pushed as far to the left as possible,” meaning that the earlier design-related reliability problems are discovered, the less expensive it is to correct them and the less impact there is on the completion of the system. Finally, the memo stated that all DoD acquisition contracts will require, at a minimum, the system engineering practices of ANSI/GEIA-STD-0009 (Information Technology Association of America, 2008).

TWO MAJOR INITIATIVES TO PROMOTE RELIABILITY GROWTH: ANSI/GEIA-STD-0009 AND DTM 11-003

ANSI/GEIA-STD-0009: A Standard to Address Reliability Deficiencies

ANSI/GEIA-STD-0009 (Information Technology Association of America, 2008) is a recent document that parties can agree to use as a standard for DoD purposes. It begins with a statement that the user’s needs are represented by four reliability objectives.

Test data identifying the system failure modes that will result from life-cycle loads, data verifying the mitigation of these failure modes, updates of the reliability requirements verification strategy, and updates to the reliability assessment.

The standard also says that the developer should develop a model that relates component-level reliabilities to system-level reliabilities. In addition, the identification of failure modes and mechanisms shall start as soon as the development begins.
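One common way to relate component-level reliabilities to a system-level figure is a series/parallel reliability block diagram. The sketch below is illustrative only: the standard does not prescribe this or any other modeling method, the component names and reliability values are hypothetical, and failures are assumed to be statistically independent.

```python
from math import prod


def series(reliabilities):
    """Reliability of blocks in series: every block must work."""
    return prod(reliabilities)


def parallel(reliabilities):
    """Reliability of redundant units: at least one unit must work."""
    return 1.0 - prod(1.0 - r for r in reliabilities)


def system_reliability(blocks):
    """Aggregate a series chain of blocks, each a list of redundant units."""
    return series(parallel(units) for units in blocks.values())


def single_points_of_failure(blocks):
    """Blocks with no redundancy: one unit failing fails the system."""
    return [name for name, units in blocks.items() if len(units) == 1]


# Hypothetical system: each key is a block in the series chain, and each
# value lists the mission reliabilities of that block's redundant units.
blocks = {
    "power_supply": [0.99],
    "processor": [0.95, 0.95],  # two redundant processors
    "sensor": [0.98],
}

if __name__ == "__main__":
    print(round(system_reliability(blocks), 7))
    print(single_points_of_failure(blocks))
```

A model of this kind also supports the other uses the text mentions, such as spotting blocks without redundancy. Real programs would typically need to account for dependent failures and time-varying loads, which this sketch ignores.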

Failures that occur in either test or the field are to be analyzed until the root-cause failure mechanism has been identified. In addition, the developer must make use of a closed-loop failure mitigation process.

The developer shall employ a mechanism for monitoring and communicating throughout the organization (1) descriptions of test and field failures, (2) analyses of failure mode and root-cause failure mechanism, and (3) the status of design and/or process corrective actions and risk-mitigation decisions. This mechanism shall be accessible by the customer. The developer shall assess the reliability of the system/product periodically throughout the life cycle. Reliability estimates from analysis, modeling and simulation, and test shall be tracked as a function of time and compared against customer reliability requirements. The implementation of corrective actions shall be verified and their effectiveness tracked. Formal reliability growth methodology shall be used where applicable in order to plan, track, and project reliability improvement.

The developer shall plan and conduct activities to ensure that the design reliability requirements are met. For complex systems/products, this strategy shall include reliability values to be achieved at various points during development. The verification shall be based on analysis, modeling and simulation, testing, or a mixture. Testing shall be operationally realistic.

For Objective 4, to monitor and assess user reliability, ANSI/GEIA-STD-0009 directs that RFPs mandate that proposals include methods by which field performance can be used as feedback for system reliability improvement.

DTM 11-003: Improving Reliability Analysis, Planning, Tracking, and Reporting

As mentioned above, the deficiency in the reliability of fielded systems may be at least partially due to proposals that gave insufficient attention to plans for achieving reliability requirements, both initially and through testing. This issue is also addressed in DTM 11-003 (U.S. Department of Defense, 2011b, pp. 1-2), which “amplifies procedures in Reference (b) [DoD Instruction 5000.02] and is designed to improve reliability analysis, planning, tracking, and reporting.” It “institutionalizes reliability planning methods and reporting requirements timed to key acquisition activities to monitor reliability growth.”

DTM 11-003 stipulates that six procedures take place (pp. 3-4):

Program managers (PMs) must formulate a comprehensive reliability and maintainability (R&M) program using an appropriate reliability growth strategy to improve R&M performance until R&M requirements are satisfied.

The program will consist of engineering activities including: R&M allocations, block diagrams and predictions; failure definitions and scoring criteria; failure mode, effects and criticality analysis; maintainability and built-in test demonstrations; reliability growth testing at the system and subsystem level; and a failure reporting and corrective action system maintained through design, development, production, and sustainment. The R&M program is an integral part of the systems engineering process.

The lead DoD Component and the PM, or equivalent, shall prepare a preliminary Reliability, Availability, Maintainability, and Cost Rationale Report in accordance with Reference (c) [DOD Reliability, Availability, Maintainability, and Cost Rationale Report Manual, 2009] in support of the Milestone (MS) A decision.

This report provides a quantitative basis for reliability requirements and improves cost estimates and program planning.

The Technology Development Strategy preceding MS A and the Acquisition Strategy preceding MS B and C shall specify how the sustainment characteristics of the materiel solution resulting from the analysis of alternatives and the Capability Development Document sustainment key performance parameter thresholds have been translated into R&M design requirements and contract specifications. The strategies shall also include the tasks and processes to be stated in the request for proposal that the contractor will be required to employ to demonstrate the achievement of reliability design requirements. The Test and Evaluation Strategy and the Test and Evaluation Master Plan (TEMP) shall specify how reliability will be tested and evaluated during the associated acquisition phase.

Reliability Growth Curves (RGC) shall reflect the reliability growth strategy and be employed to plan, illustrate and report reliability growth. A RGC shall be included in the SEP [systems engineering plan] at MS A [Milestone A], and updated in the TEMP [test and evaluation master plan] beginning at MS B. RGC will be stated in a series of intermediate goals and tracked through fully integrated, system-level test and evaluation events until the reliability threshold is achieved. If a single curve is not adequate to describe overall system reliability, curves will be provided for critical subsystems with rationale for their selection.
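To illustrate what a growth curve "stated in a series of intermediate goals" might look like, the sketch below uses a power-law (Duane-style) planning curve for cumulative mean time between failures (MTBF). This is an assumption made for illustration: DTM 11-003 is not quoted here as prescribing any particular growth model, and the function names, initial MTBF, growth rate, and test-event hours are all hypothetical.

```python
def planned_mtbf(t, initial_mtbf, initial_time, growth_rate):
    """Planned cumulative MTBF after t cumulative test hours.

    Assumed power-law form: MTBF(t) = initial_mtbf * (t / initial_time) ** growth_rate
    for t >= initial_time, and flat at initial_mtbf before that.
    """
    if t <= initial_time:
        return initial_mtbf
    return initial_mtbf * (t / initial_time) ** growth_rate


def intermediate_goals(event_hours, initial_mtbf, initial_time, growth_rate):
    """Pair each planned test event (in cumulative hours) with its MTBF goal."""
    return [
        (t, planned_mtbf(t, initial_mtbf, initial_time, growth_rate))
        for t in event_hours
    ]


def hours_to_reach(threshold, initial_mtbf, initial_time, growth_rate):
    """Cumulative test hours at which the planned curve reaches the threshold."""
    if not 0 < growth_rate < 1:
        raise ValueError("growth_rate must be in (0, 1)")
    if threshold <= initial_mtbf:
        return initial_time
    return initial_time * (threshold / initial_mtbf) ** (1.0 / growth_rate)


if __name__ == "__main__":
    # Hypothetical plan: 50-hour MTBF after the first 100 test hours,
    # growth rate 0.3, and a 100-hour reliability threshold.
    for hours, goal in intermediate_goals([100, 250, 500, 1000], 50.0, 100.0, 0.3):
        print(f"{hours:>5} h: goal {goal:.1f} h MTBF")
    print(f"threshold reached near {hours_to_reach(100.0, 50.0, 100.0, 0.3):.0f} h")
```

Comparing demonstrated values at each test event against goals like these is one way a program could judge whether the planned test time is enough to reach the threshold; the choice of growth rate drives the answer and would need to be justified for a real program.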

PMs and operational test agencies shall assess the reliability growth required for the system to achieve its reliability threshold during initial operational test and evaluation and report the results of that assessment to the Milestone Decision Authority at MS C.

Reliability growth shall be monitored and reported throughout the acquisition process. PMs shall report the status of reliability objectives and/or thresholds as part of the formal design review process, during Program Support Reviews, and during systems engineering technical reviews. RGC shall be employed to report reliability growth status at Defense Acquisition Executive System reviews.

CRITIQUE

The 2011 DOT&E Annual Report (U.S. Department of Defense, 2011a, p. iv) points out that some changes in system reliability are becoming evident:

Sixty-five percent of FY10 TEMPs documented a reliability strategy (35 percent of those included a reliability growth curve), while only 20 percent of FY09 TEMPs had a documented reliability strategy. Further, three TEMPs were disapproved, citing the need for additional reliability documentation, and four other TEMPs were approved with a caveat that the next revision must include more information on the program’s reliability growth strategy.

Both ANSI/GEIA-STD-0009 and DTM 11-003 serve an important purpose: to help produce defense systems that (1) have more reasonable reliability requirements and (2) are more likely to meet these requirements in design and development. However, given their intended purpose, these are relatively general documents that do not provide specifics as to how some of the demands are to be met. For instance, ANSI/GEIA-STD-0009 (Information Technology Association of America, 2008, p. 2) “does not specify the details concerning how to engineer a system / product for high reliability. Nor does it mandate the methods or tools a developer would use to implement the process requirements.”

The tailoring to be done will be dependent upon a “customer’s funding profile, developer’s internal policies and procedures and negotiations between the customer and developer.” Proposals are to include a reliability program plan, a conceptual reliability model, an initial reliability flow-down of requirements, an initial system reliability assessment, candidate reliability trade studies, and a reliability requirements verification strategy. But there is no indication of how these activities should be carried out. How should one produce the initial reliability assessment for a system that only exists in diagrams?

What does an effective design-for-reliability plan include? How should someone track reliability over time in development when few developmental and operationally relevant test events have taken place? How can one determine whether a test plan is adequate to take a system with a given initial reliability and improve that system’s reliability through test-analyze-and-fix to the required level? How does one know when a prototype for a system is ready for operational testing?

Although the TechAmerica Engineering Bulletin Reliability Program Handbook, TA-HB-0009, was produced at least in part to answer these questions, a primary goal of this report is to provide additional specificity as to how some of these steps should be carried out.

REFERENCES

Adolph, P., DiPetto, C.S., and Seglie, E.T. (2008). Defense Science Board task force developmental test and evaluation study results. ITEA Journal, 29, 215-221.

Forbes, J.A., Long, A., Lee, D.A., Essmann, W.J., and Cross, L.C. Developing a Reliability Investment Model: Phase II - Basic, Intermediate, and Production and Support Cost Models. LMI Government Consulting. LMI Report # HPT80T1. Available: August 2014.

Information Technology Association of America. (2008). ANSI/GEIA-STD-0009. Available: October 2014.

U.S. Department of the Army. Pamphlet 73-2, Test and Evaluation Master Plan Procedures and Guidelines. Available: October 2014.

U.S. Department of Defense. FY 2007 Annual Report. Office of the Director of Operational Test and Evaluation. Available: January 2014.

U.S. Department of Defense. Memorandum, Reliability of U.S. Army Materiel Systems. Acquisition Logistics and Technology, Assistant Secretary of the Army, Department of the Army. Available: January 2014.

U.S. Department of Defense. Report of the Defense Science Board Task Force on Developmental Test and Evaluation. Office of the Under Secretary of Defense for Acquisition, Technology, and Logistics. Available: January 2014.

U.S. Department of Defense. Report of the Reliability Improvement Working Group. Office of the Under Secretary of Defense for Acquisition, Technology, and Logistics. Available: January 2014.

U.S. Department of Defense. Memorandum, Implementing a Life Cycle Management Framework. Office of the Under Secretary of Defense for Acquisition, Technology, and Logistics. Available: January 2014.

U.S. Department of Defense. FY 2008 Annual Report. Office of the Director of Operational Test and Evaluation. Available: January 2014.

U.S. Department of Defense. Instruction, Operation of the Defense Acquisition System. Office of the Under Secretary of Defense for Acquisition, Technology, and Logistics. Available: January 2014.

U.S. Department of Defense. Implementation of Weapon Systems Acquisition Reform Act (WSARA) of 2009 (Public Law 111-23, May 22, 2009). October 22, 2009; Mona Lush, Special Assistant, Acquisition Initiatives, Acquisition Resources & Analysis, Office of the Under Secretary of Defense for Acquisition, Technology, and Logistics.

U.S. Department of Defense. DoD Reliability, Availability, Maintainability-Cost (RAM-C) Report Manual. Available: August 2014.

U.S. Department of Defense. Memorandum, State of Reliability. Office of the Secretary of Defense. Available: January 2014.

U.S. Department of Defense. (2011a). DOT&E FY 2011 Annual Report. Office of the Director of Operational Test and Evaluation. Available: January 2014.

U.S. Department of Defense. Memorandum, Directive-Type Memorandum (DTM) 11-003 - Reliability Analysis, Planning, Tracking, and Reporting. The Under Secretary of Defense, Acquisition, Technology, and Logistics. Available: January 2014.

U.S. Department of Defense. DOT&E FY 2013 Annual Report. Office of the Director of Operational Test and Evaluation. Available: January 2014.